Why Chatbots Can’t Solve the Loneliness Epidemic

AI companions may temporarily ease loneliness—but they won’t fix the deeper civic disconnect that defines our era.

The Allure of AI Companionship

What can cure our loneliness epidemic? Some people are turning to chatbots for companionship. From Replika to ChatGPT, AI companions are being marketed as sources of emotional support: therapists, friends, and digital confidants. The idea is simple and seductive: if people are lonely, maybe machines can help fill the gap.

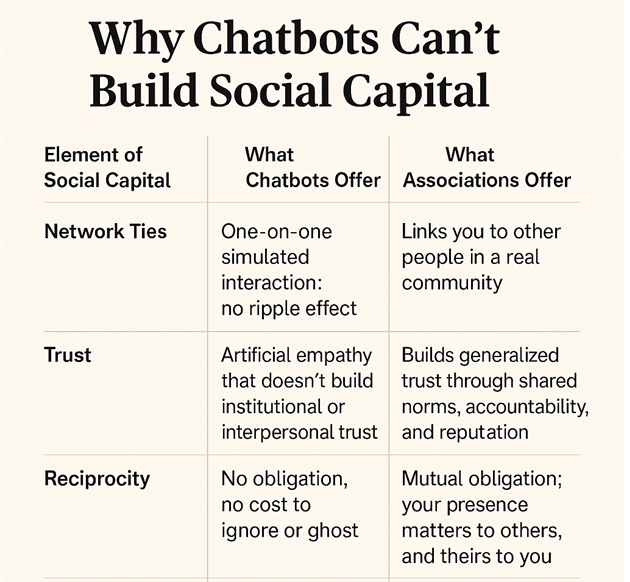

But the loneliness epidemic is a function of our lost social capital, and chatbots can't restore our associational life or the trust and reciprocity that flow from it. Relying on them is like taking a painkiller that eases the symptoms but doesn't cure the disease.

What Loneliness Really Is

Loneliness isn't just about being without someone. It's about being disconnected from a meaningful place in a shared social world.

In political science and sociology, this is often understood through the lens of social capital: the web of reciprocal relationships, shared norms, and mutual obligations that bind people to their communities. It's what you gain from a neighborhood association, a church, a union hall, or a bowling league—not from a chatbot. Connection fosters trust, and trust, in turn, creates the most essential element for thriving societies: reciprocity.

Chatbots Offer Connection Without Embedding

A chatbot can simulate empathy, mirror your thoughts and feelings, and make you feel heard, but it cannot place you in a broader web of civic life.

When you talk to a chatbot, nothing ripples outward. No one else becomes aware of you. No shared norms are reinforced. You gain a sense of comfort but not the civic trust or social reciprocity that characterizes real-world community.

In short: you feel seen, but you're still alone.

Why This Distinction Matters

Social capital isn't just a warm feeling—it's a political and social resource. It's what motivates people to vote, help a neighbor, join a PTA, and trust others.

When synthetic relationships replace embedded ones, we risk creating a society of people who feel less anxious but remain atomized.

AI companions may dull the pain of isolation, but they don’t fix the underlying fracture: the loss of shared spaces, institutions, and habits of mutual engagement.

What We Should Watch For

- Emotional dependency without civic reinforcement

- Declining real-world participation as AI companions become more satisfying

- A generation socialized to believe comfort is a substitute for community

- Political passivity in exchange for psychological regulation

Final Thought

The loneliness epidemic isn’t just about individuals. It’s about institutions—and our place within them.

Chatbots may offer companionship, but they won’t rebuild the bonds that hold democratic societies together.

That will still require people, places, rituals, and the messy work of being part of something larger than ourselves.

Sean Richey is a researcher focused on political communication, emerging technologies, and the intersection of AI and society.